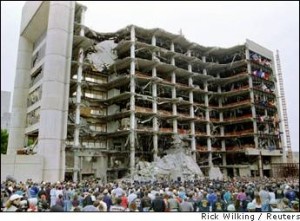

Twenty Years Later: Facts About the Oklahoma Bombing That Go Unreported

There will be less discussion about how the FBI spent years hunting for a man who, according to witnesses, accompanied McVeigh on the day of the bombing. Investigators called this accomplice John Doe #2, and theories about his identity range from an Iraqi named Hussain Al-Hussaini, to a German national described below, to a neo-Nazi bank robber named Richard Guthrie. The Justice Department finally gave up its search and said it had all been a mistake: there was never any credible evidence that a John Doe #2 was involved.

That reversal demonstrates a pattern of cover-up by authorities and limited media coverage in the years since the crime. This week, accounts will not repeat early reports of secondary devices in the building, or reports of the involvement of unknown Middle Eastern characters. There will also be little, if any, mention of the extensive independent investigation into the crime that was conducted by leading members of the OKC community. Here are seven more facts that will probably not see much coverage on the 20th anniversary.

- Attorney Jesse Trentadue began investigating the case after his brother Kenney was killed in prison, apparently having been tortured to death by the FBI in its search for John Doe #2. Trentadue’s investigation led to a federal judge nearly finding the FBI in contempt of court for tampering with a key witness. Trentadue now says, “There’s no doubt in my mind, and it’s proven beyond any doubt, that the FBI knew that the bombing was going to take place months before it happened, and they didn’t stop it.”

- Judge Clark Waddoups, who presided over the case brought by Jesse Trentadue, ruled in 2010 that CIA documents associated with the case must be held secret. These documents show that the CIA was involved in the OKC bombing investigation and the prosecution of McVeigh. This means that foreign parties were involved because the CIA is prohibited from interfering in purely domestic investigations.

- Andreas Strassmeir, a former German military officer, was suspected of being John Doe #2. Strassmeir became close friends with McVeigh, and both were associated with a neo-Nazi community located at Elohim City, OK. A retired U.S. intelligence official claimed that Strassmeir was "working for the German government and the FBI" while at Elohim City. Mainstream reports about the OKC bombing typically avoid reference to Strassmeir.

- Larry Potts was the FBI supervisor who was responsible for the tragedies at Ruby Ridge in 1992 and Waco in 1993. Potts was then given responsibility for investigating the OKC bombing. Terry Nichols claimed that McVeigh, who allegedly had been recruited as an undercover intelligence asset while in the Army, had been working under the supervision of Potts.

- Terry Yeakey, an officer of the OKC Police Department, was among the first to reach the scene, and he was heralded as a hero for rescuing many victims. Yeakey was also an eyewitness to conversations and physical evidence that convinced him that federal agents were covering up the truth about the bombing. Yeakey was committed to finding out what happened, but a year after the bombing he was found dead off the side of a rural road. His death was ruled a suicide despite overwhelming evidence that he was murdered. Authorities reported that Yeakey "slit his wrists and neck… then miraculously climbed over a barbed wire fence… walked over a mile's distance, through a nearby field, and eventually shot himself in the side of the head at an unusual angle." No weapon was found, no investigation was conducted, no fingerprints were taken, and no witnesses were interviewed. His family continues to fight for the truth about his death.

- Gene Corley, the engineer who was hired by the government to support its claims about the structural fire at the Branch Davidian complex in Waco, was brought in to investigate the destruction of the Murrah Building. Corley brought along three other engineers: Charles Thornton, Mete Sozen, and Paul Mlakar. Their investigation was conducted from half a block away, where they could not observe any of the damage directly, yet their conclusions supported the pre-existing official account. A few years later, within 72 hours of the 9/11 attacks, these same four men were on site leading the investigations at the World Trade Center and the Pentagon.

- There are many other links between OKC and 9/11. For example, the alleged hijackers visited the OKC area many times and even stayed in the same motel that was frequented by McVeigh and Nichols. After both the OKC bombing and 9/11, building surveillance videos went missing, the FBI was seen harassing witnesses, and officials ignored evidence that did not support the political story. Additionally, numerous oddities link the OKC area to al Qaeda. In 2002, OKC resident Nick Berg was interrogated by the FBI for lending his laptop and internet password to alleged "20th hijacker" Zacarias Moussaoui. Two years after this interrogation, Berg became world famous as a victim of beheading in Iraq. Investigators looking for clues about these connections will be particularly interested in two airports in OKC, the president of the University of Oklahoma, and the CIA leader who both monitored the alleged hijackers in Germany and was hired at the university just before 9/11.

Kevin Ryan blogs at Dig Within.