How today’s touchscreen tech put the world at our fingertips

The technology grew up, then fundamentally changed how we interact with devices.

When you think about touchscreens today, you probably think about smartphones and tablets, and for good reason. The 2007 introduction of the iPhone kicked off a transformation that turned a couple of niche products—smartphones and tablets—into billion-dollar industries. The current fierce competition from software like Android and Windows Phone (as well as hardware makers like Samsung and a host of others) means that new products are being introduced at a frantic pace.

The screens themselves are just one of the driving forces that make these devices possible (and successful). Ever-smaller, ever-faster chips allow a phone to do things only a heavy-duty desktop could do just a decade or so ago, something we've discussed in detail elsewhere. The software that powers these devices is more important, though. Where older tablets and PDAs required a stylus, a cramped physical keyboard, or a trackball, mobile software has adapted to be better suited to humans' native pointing device: the larger, clumsier, but much more convenient finger.

The foundation: capacitive multitouch

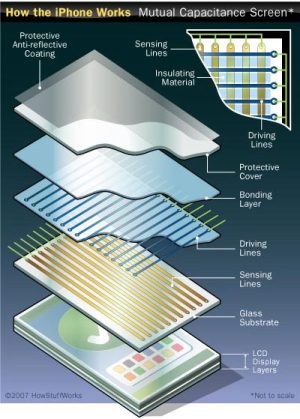

Many layers come together to form a delicious touchscreen sandwich.

We discussed some early capacitive touchscreens in our last piece, but the modern capacitive touchscreen as used in your phone or tablet is a bit different in its construction. It is composed of several layers: on the top, you've got a layer of plastic or glass meant to cover up the rest of the assembly. This layer is normally made out of something thin and scratch-resistant, like Corning's Gorilla Glass, to help your phone survive a ride in your pocket with your keys and come out unscathed. Underneath this is a capacitive layer that conducts a very small amount of electricity, which is layered on top of another, thinner layer of glass. Underneath all of this is the LCD panel itself. When your finger, a natural electrical conductor, touches the screen, it interferes with the capacitive layer's electrical field. That data is passed to a controller chip that registers the location (and, often, pressure) of the touch and tells the operating system to respond accordingly.

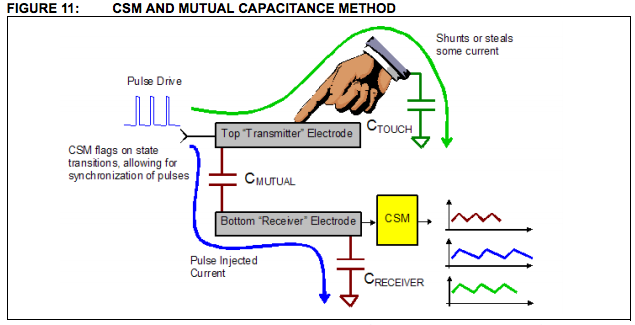

This arrangement by itself can only accurately detect one touch point at a time; try to touch the screen in two different locations and the controller will interpret the location of the touch incorrectly or not at all. To register multiple distinct touch points, the capacitive layer needs to include two separate layers: one using "transmitter" electrodes and one using "receiver" electrodes. These lines of electrodes run perpendicular to each other and form a grid over the device's screen. When your finger touches the screen, it interferes with the electric signal between the transmitter and receiver electrodes.

When your finger, a conductor of electricity, touches the screen, it interferes with the electric field that the transmitter electrodes are sending to the receiver electrodes, which registers to the device as a "touch."

These basic building blocks are still at the foundation of smartphones, tablets, and touch-enabled PCs now, but the technology has evolved and improved steadily since the first modern smartphones were introduced. Special screen coatings, sometimes called "oleophobic" (or, literally, afraid of oil), have been added to the top glass layer to help screens resist fingerprints and smudges. These even make the smudges that do blight your screen a bit easier to wipe off. Corning has released two new updates to its original Gorilla Glass concept that have made the glass layer thinner while increasing its scratch-resistance. Finally, "in-cell" technology has embedded the capacitive touch layer in the LCD itself, further reducing the overall thickness and complexity of the screens.

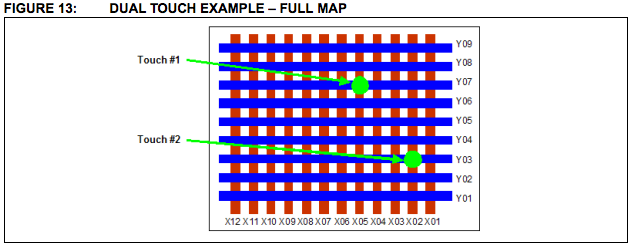

Using the coordinates from this grid of electrodes, the device can accurately detect the location of multiple touches at once.
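To make the grid idea concrete, here is a minimal sketch of how a controller might turn readings from the transmitter/receiver grid into several touch locations at once. It is an illustration, not any real controller's firmware: the function name `find_touches`, the raw "counts," the threshold value, and the assumption that a finger lowers the measured signal at nearby intersections are all ours.

```python
from collections import deque

def find_touches(baseline, reading, threshold=30):
    """Return one (row, col) centroid per touched region of the electrode grid.

    baseline and reading are 2D lists of raw counts, one value per
    intersection of a transmitter line (row) and a receiver line (column).
    Assumption: a finger lowers the signal, so a touch is reading < baseline.
    """
    rows, cols = len(baseline), len(baseline[0])
    # How far each intersection has dropped below its untouched value.
    delta = [[baseline[r][c] - reading[r][c] for c in range(cols)]
             for r in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    touches = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or delta[r][c] < threshold:
                continue
            # Flood-fill the neighboring intersections that are also disturbed,
            # so one finger covering several cells counts as a single touch.
            queue = deque([(r, c)])
            seen[r][c] = True
            total = sum_r = sum_c = 0.0
            while queue:
                y, x = queue.popleft()
                weight = delta[y][x]
                total += weight
                sum_r += weight * y
                sum_c += weight * x
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and delta[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # The signal-weighted centroid gives a position between grid lines.
            touches.append((sum_r / total, sum_c / total))
    return touches

baseline = [[100] * 6 for _ in range(5)]
reading = [row[:] for row in baseline]
reading[1][1] = reading[1][2] = 40   # one finger spanning two intersections
reading[3][4] = 50                   # a second finger elsewhere
print(find_touches(baseline, reading))  # [(1.0, 1.5), (3.0, 4.0)]
```

Because every intersection is measured separately, two fingers show up as two separate disturbed regions rather than one ambiguous reading, which is what the single-layer arrangement cannot provide.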

Let your fingers do the walking: Designing software for the human hand

Touch software trades menus, checkboxes, and cursors for big, tappable buttons.

Let's step back to 2001 or so, when Microsoft first introduced its concept of the "Tablet PC." These early tablets ran a version of Windows XP Professional called the "Tablet PC Edition." It supported pen input and came with a small suite of applications for handwritten notes and other pen-centric tasks.

Despite these additions, the Tablet PC Edition of Windows XP was very much Windows XP with a few new pieces laid on top of it. The pens could be acceptable pointing devices (subject to the quality and accuracy of the touchscreen), but the vast majority of the operating system and the applications that ran on it were designed with the far-more-prevalent keyboard and mouse in mind. (Microsoft Office 2013 is a more recent example of software that was designed for a mouse and keyboard first.) This isn't necessarily a bad thing, but it does make such software much more difficult to use with a touchscreen.

Modern operating systems with a touch-first approach are more finger-friendly in two important ways: responsiveness and size.

When we say "responsiveness," we're not only talking about an operating system that responds quickly and accurately to input, though that's certainly very important. When you touch something in the real world, it reacts to your touch immediately, and the operating systems that feel the best to use are the ones that act the same way. Windows Phone makes a point of running smoothly even on low-end hardware, to make sure that its phones feel satisfying whether they cost $200 or were free with a contract. But consistency is also an important element of responsiveness. Android's "Jelly Bean" update included "Project Butter," which focused on making the operating system run at 60 frames per second as often as possible. This was intended to improve the sometimes-inconsistent performance of older Android versions.
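A 60 frames-per-second target translates into a fixed time budget for each frame, and a quick calculation shows why consistency matters as much as raw speed. The sketch below is only the arithmetic behind that target, not a description of how Project Butter works; the helper name `missed_frames` and the sample timings are made up for illustration.

```python
TARGET_FPS = 60
FRAME_BUDGET_MS = 1000 / TARGET_FPS  # about 16.7 ms to draw each frame

def missed_frames(frame_times_ms, budget_ms=FRAME_BUDGET_MS):
    """Return the indices of frames that took longer than the budget."""
    return [i for i, t in enumerate(frame_times_ms) if t > budget_ms]

# Hypothetical render times (in milliseconds) for five consecutive frames.
samples = [12.0, 16.0, 21.5, 15.9, 33.4]
print(round(FRAME_BUDGET_MS, 1))  # 16.7
print(missed_frames(samples))     # [2, 4]
```

Even though most of these frames finish comfortably in time, the two that overrun are the ones a user would perceive as stutter.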

Some apps use tricks to make hard-to-tap elements a bit easier to interact with. Chrome for Android will pop up a helpful magnifying-glass style box when you attempt to tap in an area with several links grouped close together, for instance.

The size of onscreen elements is also crucial to a good touch experience—fingers aren't precise enough to easily deal with a bunch of nested menus, checkboxes, and buttons like Windows or OS X use. Instead, you often have to sacrifice information density in favor of making your UI larger. Most touch operating systems have some general guidelines to follow when developing touch applications, but Apple's are well-defined and simple to explain, so we'll use iOS as an example.

Apple's Human Interface Guidelines for iOS define "the comfortable minimum size of a tappable UI element" as being 44 by 44 "touch points" large. On non-Retina displays like the iPad mini or iPhone 3GS, a "touch point" and a pixel are the same size; on a Retina display like the iPad 4 or iPhone 5, one touch point is one 2×2 square of pixels (since a Retina display has four times as many pixels as a non-Retina display of the same size). On a phone, the physical size of one of these tappable elements works out to about 0.38" (9.7mm), while on an iPad it's about 0.47" (11.9mm). Developers are free to use larger or smaller elements (many of Apple's own buttons are smaller), but these measurements exist to give developers a general idea of how much space they need to prevent accidental button presses, mis-registered touches, and anything else the user didn't intend to do.
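The relationship between touch points, pixels, and physical size is simple arithmetic, sketched below. The pixel densities are our assumptions rather than figures from the guidelines quoted here: 163 pixels per inch for a non-Retina iPhone (326 for Retina) and 132 for a non-Retina full-size iPad. With those values, the sizes quoted above appear to match the corner-to-corner diagonal of the 44-point square rather than the length of one side. The function name `target_size` is hypothetical.

```python
import math

MM_PER_INCH = 25.4

def target_size(points=44, scale=1, ppi=163):
    """Describe a square tappable element measuring points x points.

    scale: pixels per touch point along one axis (1 non-Retina, 2 Retina).
    ppi: physical pixels per inch of the panel (an assumed value).
    """
    pixels = points * scale                 # edge length in device pixels
    side_in = pixels / ppi                  # edge length in inches
    diag_in = side_in * math.sqrt(2)        # corner-to-corner length
    return {
        "pixels": pixels,
        "side_in": round(side_in, 2),
        "diagonal_in": round(diag_in, 2),
        "diagonal_mm": round(diag_in * MM_PER_INCH, 1),
    }

print(target_size(44, scale=1, ppi=163))  # non-Retina phone: 44 px, 0.38" diagonal
print(target_size(44, scale=2, ppi=326))  # Retina phone: 88 px, same physical size
print(target_size(44, scale=1, ppi=132))  # non-Retina iPad: 44 px, 0.47" diagonal
```

The Retina case doubles the pixel count along each edge but leaves the physical size unchanged, which is the point of defining targets in touch points. For the iPad the unrounded diagonal comes out nearer 12.0mm; converting the already-rounded 0.47" gives the 11.9mm quoted above.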

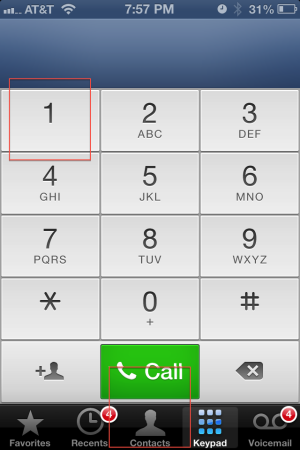

The red squares laid over top of the iPhone's dialer app represent Apple's minimum recommended size for tappable onscreen elements. Even Apple doesn't follow these to the letter (the buttons at the bottom of the screen in particular are a bit smaller than recommended), but they're still useful sizes to keep in mind.

As this software has matured and people have become more used to it, newer phones and tablets have become even more button-averse. Stock Android switched out its hardware buttons for onscreen software buttons (which, like software keyboards, are context-sensitive and can change based on the application you're running). Windows 8 and Windows RT tablets still retain a Windows button, but they rely on gestures and swipes from the edge of the screen. The BlackBerry Z10 does away with even a home button, relying entirely on gestures for not just OS navigation, but also for waking the phone from sleep.

Look around you: touch is everywhere

The best touch-enabled Ultrabooks (like the Dell XPS 12 pictured here) include touch capability without getting in the way of the standard PC functions.

For PCs, Windows 8 has given rise to a new class of convertible laptops that try to serve the needs of both touch users and Windows desktop users. These experiments haven't always worked out, but the best of them (designs like Lenovo's IdeaPad Yoga and Dell's XPS 12) add touch capabilities without diminishing the devices' utility as regular laptops. Sony's PlayStation Vita, its forthcoming PlayStation 4, and Nintendo's Wii U all also use touchscreens or touchpads as a secondary means of input in addition to the respective consoles' signature controllers. All of these devices show that touch can be additive as well as disruptive: it can improve already good devices just as readily as it replaced the ungainly smartphones and tablets of the early-to-mid 2000s.

Other experiments on the PC side of the fence attempt to define completely new products. PCs like Sony's Tap 20, Lenovo's IdeaCentre Horizon 27, and Asus' Transformer AiO all combine a traditional all-in-one PC with a large touchscreen that multiple people can gather around at once. This seems especially conducive to entertainment and education—multiplayer games and collaborative exercises are commonly touted as selling points for these big new screens.

Big touchscreens like the one on Sony's Tap 20 open the door to multiple simultaneous users.

Touch seems equally likely to move down into smaller screens. More than one company is rumored to be working on a "smart watch" to augment (or even replace select functions of) your smartphone. It's a fair bet that if these products come to pass, more than one of them will work touchscreens in somehow (though as the Pebble watch shows, they can also work reasonably well without touch). If you owned one of the small, square sixth-generation iPod Nanos, you may have already gotten a glimpse into this future. These devices had touchscreens, a limited selection of apps, and were easy to wear around your wrist in place of a watch, provided you had the right strap.

Tiny touchscreens like the sixth-generation iPod Nano could suggest a direction for future smart watches.
