And Now for the Robot Apocalypse…

by James Corbett

TheInternationalForecaster.com

July 28, 2015

Well, you can’t blame them for trying, can you?

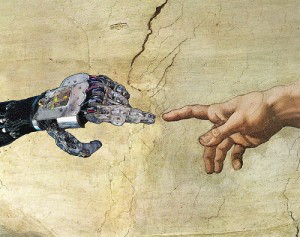

Earlier today the grandiloquently named “Future of Life Institute” (FLI) announced an open letter on the subject of ‘autonomous weapons.’ In case you’re not keeping up with artificial intelligence research, that means weapons that seek and engage targets all by themselves. While this sounds fanciful to the uninformed, it is in fact a dystopian nightmare that, thanks to startling innovations in robotics and artificial intelligence by various DARPA-connected research projects, is fast becoming a reality. Heck, people are already customizing their own multirotor drones to fire handguns; just slap some AI on that and call it Skynet.

Indeed, as anyone who has seen RoboCop, Terminator, Blade Runner or a billion other sci-fi fantasies will know, gun-wielding, self-directed robots are not to be hailed as just another rung on the ladder of technical progress. But for those who are still confused on this matter, the FLI open letter helpfully elaborates: “Autonomous weapons are ideal for tasks such as assassinations, destabilizing nations, subduing populations and selectively killing a particular ethnic group.” In other words, instead of “autonomous weapons” we might get the point across more clearly if we just call them what they are: soulless killing machines. (But then we might risk confusing them with the psychopaths at the RAND Corporation or the psychopaths on the Joint Chiefs of Staff or the psychopaths in the CIA or the psychopaths in the White House…)

In order to confront this pending apocalypse, the fearless men and women at the FLI have bravely stepped up to the plate and…written a polite letter to ask governments to think twice before developing these really effective, well-nigh unstoppable super weapons (pretty please). Well, as I say, you can’t blame them for trying, can you?

Well, yes. Actually you can. Not only is the letter a futile attempt to stop the psychopaths in charge from developing a better killing implement, it is a deliberate whitewashing of the problem.

According to FLI, the idea isn’t scary in and of itself, it isn’t scary because of the documented history of the warmongering politicians in the US and the other NATO countries, it isn’t scary because governments murdering their own citizens was the leading cause of unnatural death in the 20th century. No, it’s scary because “It will only be a matter of time until [autonomous weapons] appear on the black market and in the hands of terrorists, dictators wishing to better control their populace, warlords wishing to perpetrate ethnic cleansing, etc.” If you thought the hysteria over Iran’s nuclear non-weapons program was off the charts, you ain’t seen nothing yet. Just wait till the neo-neocons get to claim that Assad or Putin or the enemy of the week is developing autonomous weapons!

In fact, the FLI doesn’t want to stop the deployment of AI on the battlefield at all. Quite the contrary. “There are many ways in which AI can make battlefields safer for humans” the letter says before adding that “AI has great potential to benefit humanity in many ways, and that the goal of the field should be to do so.” In fact, they’ve helpfully drafted a list of research priorities for study into the field of AI on the assumption that AI will be woven into the fabric of our society in the near future, from driverless cars and robots in the workforce to, yes, autonomous weapons.

So who is FLI, and who signed this open letter? Oh, just Stephen Hawking, Elon Musk, Nick Bostrom and a host of Silicon Valley royalty and academic bigwigs. Naturally the letter is being plastered all over the media this week in what seems suspiciously like an advertising campaign for the machine takeover, with Bill Gates and Stephen Hawking and Elon Musk having already broached the subject in the past year, as well as the Channel Four drama Humans and a whole host of other cultural programming coming along to subtly indoctrinate us that this robot-dominated future is an inevitability. This includes extensive coverage of this topic in the MSM, including copious reports in outlets like The Guardian telling us how AI is going to merge with the “Internet of Things.” But don’t worry; it’s mostly harmless.

…or so they want us to believe. Of course what they don’t want to talk about in great detail is the nightmare vision of the technocratic agenda that these technologies (or their forerunners) are enabling and the transhumanist nightmare that this is ultimately leading us toward. That conversation is reserved for proponents of the Singularity like Ray Kurzweil, and any attempts to point out the obvious problems with this idea are pooh-poohed as “conspiracy theory.”

And so we have suspect organizations like the “Future of Life Institute” trying to steer the conversation on AI into how we can safely integrate these potentially killer robots into our future society even as the Hollywood programmers go overboard in steeping us in the idea. Meanwhile, those of us in the reality-based community get to watch this grand uncontrolled experiment with the future of our world unfold like the genetic engineering experiment and the geoengineering experiment.

What can be done about this AI / transhumanist / technocratic agenda? Can it be derailed? Contained? Stopped altogether? How?
