Ars tours Facebook's DIY-hardware lab to learn why it embraces open source hardware.

A test rack in Facebook's hardware electrical test lab filled with Facebook DIY hardware.

Sean Gallagher

MENLO PARK, CA—Building 17 of Facebook's headquarters sits on what was once a Sun Microsystems campus known fondly as "Sun Quentin." It now houses a team of Facebook engineers in the company's electrical lab. Every day, they push forward the company's vision of how data center hardware should be built. These engineers constantly bench-test designs for their in-house server hardware—essentially putting an end to server hardware as we know it.

Ars recently visited Facebook's campus to get a tour of the server lab from Senior Manager of Hardware Engineering Matt Corddry, leader of Facebook's server hardware design team. What's happening at Facebook's lab isn't just affecting the company's data centers, it's part of Facebook's contribution to the Open Compute Project (OCP), an effort that hopes to bring open-source design to data center server and storage hardware, infrastructure, and management interfaces across the world.

Facebook, Amazon, and Google are all very picky about their server hardware, and these tech giants mostly build it themselves from commodity components. Frank Frankovsky, VP of hardware design and supply chain operations at Facebook, was instrumental in launching the Open Compute Project because he saw the waste in big cloud players reinventing things they could share. Frankovsky felt that bringing the open-source approach Facebook has followed for software to the hardware side could save the company and others millions—both in direct hardware costs and in maintenance and power costs.

Just as the Raspberry Pi single-board computer and the Arduino open-source microcontroller have captured the imagination of small-scale hardware hackers, OCP aims to make DIY easier, more effective, and more flexible at a macro scale. What Facebook and Open Compute are doing to data center hardware may not ultimately kill the hardware industry, but it will certainly turn it on its head. Yes, the open-sourced, commoditized motherboards and other subsystems used by Facebook were originally designed specifically for the "hyper scale" world of data centers like those of Facebook, Rackspace, and other cloud computing providers. But these designs could easily find their way into other do-it-yourself hardware environments or into "vanity free" systems sold to small and large enterprises, much as Linux has.

And open-source commodity hardware could quickly make an impact beyond its original audience, because hardware makers can adopt it freely, driving down the price of new systems. That's not necessarily good news for Hewlett-Packard, Cisco, and other big players in corporate IT. "Vanity free," open-source systems will likely speed up innovation while disrupting the business model those companies were built on.

“Open” as in “open-minded”

To be clear, Open Compute doesn't go open-source all the way down to the CPU. Even the Raspberry Pi isn't based on open-source hardware, because there's no open-designed silicon that is capable enough (and manufacturers aren't willing to produce one in volume for economic reasons). The OCP hardware designs are "open" at a higher level: anyone can use standards-based components to create the motherboards, the chassis, the rack mountings, the racks, and the other components that make up servers.

"We focus on the simplest design possible," Corddry told me. "It's focused really tightly on really high scalability and driving out complexity and any glamor or vanity in the design." That makes it easy to maintain, cheap to buy and build, and simple to adapt to new problems as they emerge. It also makes it easy for Facebook and others who buy into the OCP philosophy to build things on top of the designs. The ideas that came out of the OCP Hardware Hackathon at the Facebook campus on June 18 are a prime example.

So far, Facebook and Rackspace are the main adopters of OCP hardware. But that could soon change as the dynamics of open-source hardware start to kick in. As Intel, AMD, and others start to turn out more components built to the OCP specification and contribute more intellectual property to the initiative, some involved with the effort believe it will snowball.

"I think our industry realized probably about a decade ago, when Linux took over for Unix, that open was actually a pretty positive thing for the suppliers as well as the consumers in large-scale computing," Corddry said. "Linux didn't kill the data center industry or the OS industry. I think we're looking at the same pattern and seeing that openness and hardware doesn't mean the death of hardware. It probably means the rebirth of hardware, where we see a greater pace of innovation because we're not always reinventing the wheel."

Deconstructing the server

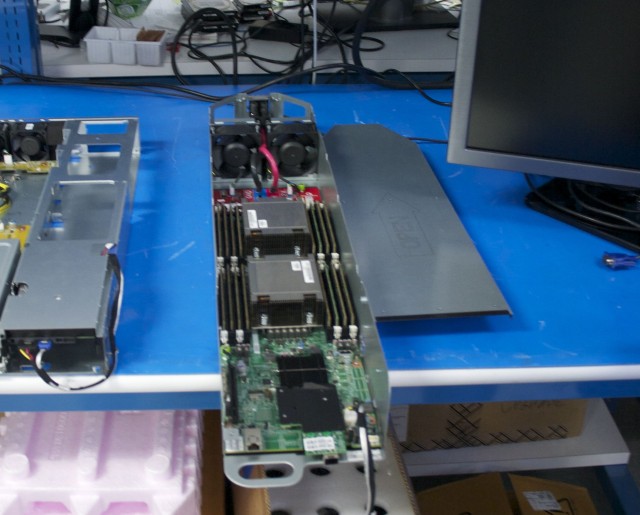

Facebook's Senior Manager of Hardware Engineering Matt Corddry shows off some of the "sled" servers designed and built by Facebook.

Sean Gallagher

The design principles behind Facebook's hardware come from direct hands-on experience. Corddry said that all his engineers spend time working as technicians at Facebook data centers "so everyone walks a mile in those shoes and understands what it is to work on this gear at scale."

The approach taken by Facebook and by the Open Compute Project is postmodernist deconstruction for the data center: the disaggregation of the components that usually make up a server into functional modules that are as simple and efficient as possible. There are few "servers" per se in Facebook's data center architecture. Instead, there are racks filled with "sleds" of functionality. "That's going to be a pattern you see from us over the next couple of years," Corddry said while showing off a few sled designs in the hardware lab. "A lot of our hardware designs will be focused on one class of problem."

The approach is already being rolled out in Facebook's newest data centers, where racks are filled with systems built from general-purpose compute sleds (motherboards populated with CPUs, memory, and PCI cards for specific tasks), storage sleds (high-density disk arrays), and "memory sleds" (systems with large quantities of RAM and low-power processors designed for handling large in-memory indexes and databases).

"We're not putting these into the network sites yet," Corddry said, referring to the colocation sites Facebook uses to connect to major Internet peering sites. "But we're putting them in all our data center facilities, including Ashburn, Virginia—where it's not a net new build, it's more of a classic data center environment. In fact, we've designed a variant of the original Open racks to go into that kind of facility that has the standard dual power so they can play nice with the colo environment."

The one thing all of these disaggregated modules of hardware have in common is that they're fully self-contained and can be yanked out, repaired, or replaced with minimum effort. Corddry pointed to one of the compute sleds on the lab's workbench. "If there's something to repair on this guy, all you have to do is grab the handle and pull. There are no screws, no need for a screwdriver, and just one cable in front."

A "Windmill"-based Facebook compute sled, in what Corddry calls the "sushi boat" form factor.

Sean Gallagher

There's no power supply on a compute sled; all the power is pulled from the rack for the sake of efficiency. "There's a 12 volt power connection in back," Corddry explained. "We send 12 volt regulated to the board, so we get rid of all the complexity of having power conversion and supply in the system. The principles of efficiency tell you to only convert the power the minimum number of times required; you’re losing two to five percent of the power every time you step it down. So we bring unregulated 480 volt 3-phase straight into our rack and have a power shelf that converts it to 12 volt that goes straight to the motherboard. We convert it once from when it comes in from the utility to when it hits the motherboard. A lot of data centers will convert power three or four times: from 480 to 208, into a UPS, back out of the UPS, into a power distribution unit, and into a server power supply."
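Corddry's arithmetic compounds quickly, and a few lines make that concrete. This is a minimal sketch under a simplifying assumption: every conversion stage loses the same fraction of power, taken from the two-to-five-percent range he quoted. `delivered_fraction` is a hypothetical helper, not anything from Facebook's designs.

```python
def delivered_fraction(loss_per_stage: float, stages: int) -> float:
    """Fraction of utility power reaching the motherboard after `stages`
    conversions, assuming (simplification) each stage loses the same fraction."""
    if not 0.0 <= loss_per_stage < 1.0 or stages < 0:
        raise ValueError("loss_per_stage must be in [0, 1) and stages >= 0")
    return (1.0 - loss_per_stage) ** stages


# Corddry's quoted range: two to five percent lost per conversion.
for loss in (0.02, 0.05):
    single = delivered_fraction(loss, 1)  # one step: 480 V in, 12 V out at the power shelf
    chain = delivered_fraction(loss, 4)   # a multi-step legacy chain like the one he lists
    print(f"{loss:.0%} loss/stage: 1 conversion {single:.1%}, 4 conversions {chain:.1%}")
```

Under those assumptions, a single conversion delivers 95 to 98 percent of utility power to the board, while four conversions deliver roughly 81 to 92 percent.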

The efficiency continues within the design of the cooling fans in each compute sled. "These guys are incredibly efficient," Corddry said. "It has nice big fan blades that turn slowly. It only takes three or four [watts] to move air through this guy to keep it cool, compared to a traditional 1U server, which can be 80 to 100 watts."
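Why big, slow fans save power can be illustrated with the idealized fan affinity laws: for geometrically similar fans, airflow scales with rotational speed times diameter cubed, and shaft power scales with speed cubed times diameter to the fifth. This sketch assumes those ideal laws hold exactly (real fans also depend on system pressure and motor efficiency), and it is not based on any measurement of Facebook's fans; `relative_fan_power` is a hypothetical name.

```python
def relative_fan_power(diameter_ratio: float) -> float:
    """Ideal shaft power of a scaled-up fan, relative to the original, when it
    is slowed down to move the same airflow (idealized fan affinity laws)."""
    # Airflow Q ~ N * D**3, so holding Q fixed means N scales as 1 / D**3.
    speed_ratio = 1.0 / diameter_ratio**3
    # Shaft power P ~ N**3 * D**5.
    return speed_ratio**3 * diameter_ratio**5


for ratio in (1.0, 1.5, 2.0):
    print(f"{ratio}x diameter -> {relative_fan_power(ratio):.3f}x power for the same airflow")
```

In this idealized model, doubling the blade diameter lets the fan turn at one-eighth the speed for the same airflow and cuts shaft power to one-sixteenth, which points in the same direction as Corddry's comparison.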

Facebook's homebrew database server design, based on Windmill motherboards, carries power supplies for high availability. It's replacing the last "vanity" hardware in Facebook's inventory.

Sean Gallagher

While the majority of Facebook's servers run off a single power feed—"the power goes out, the generator kicks in, and we're OK," said Corddry—the database servers need extra power protection to prevent corruption caused by an outage. So the servers that support Facebook's User Database and other big databases need to have redundant power.

Facebook's hardware engineering team met that need with Windmill motherboards, adding a power supply kit "to allow us to run off our high availability dual feed power," Corddry said. "Normally, this design would actually have a single power supply in the back and two motherboards."
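A rough way to see why a second feed matters is the textbook availability formula for redundant components: power is lost only if every feed is down at once. This sketch assumes the feeds fail independently, which real facilities only approximate, and the 99.9 percent per-feed figure is purely hypothetical; `combined_availability` is an illustrative name.

```python
def combined_availability(feed_availability: float, feeds: int = 2) -> float:
    """Probability that at least one of `feeds` independent power feeds is live."""
    if not 0.0 <= feed_availability <= 1.0 or feeds < 1:
        raise ValueError("availability must be in [0, 1] and feeds >= 1")
    # Power is lost only if every feed is down at the same time (independence assumed).
    return 1.0 - (1.0 - feed_availability) ** feeds


single = combined_availability(0.999, feeds=1)  # hypothetical per-feed availability
dual = combined_availability(0.999, feeds=2)
print(f"single feed: {single:.6f}, dual feed: {dual:.6f}")
```

With that hypothetical 99.9 percent per feed, two independent feeds raise the chance that at least one is live to about 99.9999 percent.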

The 30-terabyte storage drawer

The "Open Vault" storage sled, with one of its two drawers of drives extended. The drawers bend down for easy access to individual drives.

Sean Gallagher

Open Vault is a "tray format full of hard drives," Corddry said. "When you're doing high density storage—and we do a lot of storage, as you can imagine—a lot of what you want to do is fill the volume of the enclosure."

The Open Vault unit does just that. Practically all its enclosed space is taken up by 30 hard drives, configured in two separate pullout units of 15 drives each.

"Drives don't draw a lot of power or put off a lot of heat," Corddry explained. "You don't run out of power or cooling first—you run out of space. So we designed something with as little extra structure as possible that would use the full volume of the rack."

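Corddry's point that storage runs out of space before it runs out of power or cooling can be framed as finding the binding constraint: compute the drive count each resource allows and take the smallest. In this sketch every number (rack units, power and cooling budgets, drives per rack unit, watts per drive) is a made-up illustrative value, and `RackBudget` and `binding_constraint` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackBudget:
    space_u: float    # rack units set aside for storage
    power_w: float    # electrical budget for those units
    cooling_w: float  # heat the rack position can reject


def binding_constraint(budget: RackBudget, drives_per_u: float, watts_per_drive: float):
    """Return (limiting resource, max drives) for a hypothetical rack budget."""
    limits = {
        "space": budget.space_u * drives_per_u,
        "power": budget.power_w / watts_per_drive,
        # Essentially all of a drive's electrical power ends up as heat.
        "cooling": budget.cooling_w / watts_per_drive,
    }
    resource = min(limits, key=limits.get)
    return resource, int(limits[resource])


# Illustrative numbers only; none of these come from Facebook or the OCP specs.
print(binding_constraint(RackBudget(space_u=30, power_w=12_000, cooling_w=12_000),
                         drives_per_u=15, watts_per_drive=10))
```

With those placeholder figures, space caps the rack at 450 drives well before power or cooling would (1,200 drives each), the situation that pushes a design toward filling every bit of enclosure volume.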
Each individual drive can be hot-swapped. The trays of drives in the Open Vault are mounted on hinges with friction springs. "If you put this in a top of a rack, in this sort of tray configuration, it's really hard to reach over and get this rear hard drive," Corddry demonstrated. But the springs and hinges allow the whole tray to be bent down for access while the drives are still spinning. Corddry said the hinges have made a big difference in serviceability of Facebook's storage.

For now, Facebook's storage arrays are all connected to servers via a Serial Attached SCSI (SAS) interface. This means that they need to be tethered to a compute node via a PCI adapter. But Facebook is moving toward making all of its storage accessible over the network to reduce the cabling complexity further—and not by using a storage area network protocol like iSCSI.

"Where we want to take this is putting a micro server on the storage node and attaching it via 10-gigabit Ethernet, or some other in-rack interconnect—and getting rid of the complex node-to-node cabling," said Corddry. "So every node has an Ethernet attachment and nothing else." All storage requests would then go through a software call rather than direct storage access, which frees compute nodes from having to drive disk I/O.

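Corddry didn't describe the protocol Facebook has in mind, so the following is only a hypothetical sketch of the idea that a compute node reaches storage through a software call instead of a SAS cable. A made-up, length-prefixed PUT/GET protocol runs over a local socket pair that stands in for the in-rack Ethernet link; `StorageNode`, `StorageClient`, and the wire format are illustrative assumptions.

```python
import socket
import struct
import threading


def send_msg(sock: socket.socket, payload: bytes) -> None:
    # Hypothetical framing: 4-byte big-endian length, then the payload.
    sock.sendall(struct.pack("!I", len(payload)) + payload)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    # Keep reading until exactly n bytes arrive or the peer hangs up.
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf += chunk
    return buf


def recv_msg(sock: socket.socket) -> bytes:
    (length,) = struct.unpack("!I", recv_exact(sock, 4))
    return recv_exact(sock, length)


class StorageNode:
    """Stand-in for a micro server on a storage sled (hypothetical)."""

    def __init__(self) -> None:
        self.blobs: dict[bytes, bytes] = {}  # in-memory stand-in for the drives

    def handle(self, request: bytes) -> bytes:
        # Requests look like b"PUT <key> <data>" or b"GET <key>"; keys contain no spaces.
        op, _, rest = request.partition(b" ")
        if op == b"PUT":
            key, _, data = rest.partition(b" ")
            self.blobs[key] = data
            return b"OK"
        if op == b"GET":
            return self.blobs.get(rest, b"")
        return b"ERR unknown op"

    def serve(self, sock: socket.socket) -> None:
        # Answer requests until the compute node closes its end.
        with sock:
            while True:
                try:
                    request = recv_msg(sock)
                except ConnectionError:
                    return
                send_msg(sock, self.handle(request))


class StorageClient:
    """What a compute node would call instead of driving disk I/O itself."""

    def __init__(self, sock: socket.socket) -> None:
        self.sock = sock

    def put(self, key: bytes, data: bytes) -> bytes:
        send_msg(self.sock, b"PUT " + key + b" " + data)
        return recv_msg(self.sock)

    def get(self, key: bytes) -> bytes:
        send_msg(self.sock, b"GET " + key)
        return recv_msg(self.sock)


if __name__ == "__main__":
    compute_end, storage_end = socket.socketpair()  # stands in for the Ethernet link
    node = StorageNode()
    worker = threading.Thread(target=node.serve, args=(storage_end,), daemon=True)
    worker.start()
    client = StorageClient(compute_end)
    client.put(b"photo:42", b"jpeg bytes")
    print(client.get(b"photo:42"))
    compute_end.close()
    worker.join(timeout=2)
```

The design point the sketch mirrors is that the compute node holds only a network handle; which drives hold the data, and how they are driven, stays on the storage sled.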
It’s a similar approach to what Facebook is moving toward with its flash storage and large in-memory storage sleds. Those are based on compute nodes to provide low-latency access for applications over the network in-rack. "Most of our solid-state storage is PCI based, so we just stick it in the compute node," said Corddry. "The compute sled has a PCI riser in it, so you can put a few PCI Express cards in. We find putting flash on a disk interface is just a mismatch of technologies, underutilizing its speed."

Facebook's data needs tend to fall toward the extremes of the input-output spectrum. There are things like Haystack (Facebook's photo store) that have massive storage requirements but relatively little input and output for the amount of data they hold. These are "things that work well on spinning disk," Corddry said. "And then there are things that work very well on solid-state storage, with high I/O operations per terabyte. We don't have much in the middle that would benefit from SATA-based SSDs or high-RPM spinning disks."
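Corddry's "I/O operations per terabyte" test can be sketched as a simple comparison: if the spinning disks bought to hold a workload's data can also deliver its I/O rate, disk is fine; if you would need more drives for speed than for space, flash fits better. The device figures and workloads below are round, illustrative assumptions rather than Facebook's numbers, and `choose_tier` is a hypothetical helper.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    capacity_tb: float  # usable capacity per device
    iops: float         # random I/O operations per second per device


# Round, illustrative figures only (an assumption, not Facebook's numbers).
SPINNING_DISK = Device(capacity_tb=4.0, iops=100.0)


def iops_per_tb(iops: float, capacity_tb: float) -> float:
    """The 'I/O operations per terabyte' measure Corddry mentions."""
    return iops / capacity_tb


def choose_tier(capacity_tb: float, iops: float, disk: Device = SPINNING_DISK) -> str:
    # How many disks would we buy for space, and how many for speed?
    disks_for_space = math.ceil(capacity_tb / disk.capacity_tb)
    disks_for_speed = math.ceil(iops / disk.iops)
    # If capacity already dictates enough spindles, disk covers the I/O too.
    return "spinning disk" if disks_for_speed <= disks_for_space else "flash"


# A hypothetical Haystack-like archive: lots of data, modest I/O per terabyte.
print(choose_tier(capacity_tb=1000, iops=10_000), iops_per_tb(10_000, 1000))
# A hypothetical hot index: little data, very high I/O per terabyte.
print(choose_tier(capacity_tb=10, iops=500_000), iops_per_tb(500_000, 10))
```

The "middle" Corddry mentions would be workloads where the two disk counts are close, which is where faster disks or SATA SSDs would earn their keep.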

Network’s next

Fully configured Open Racks with "professional" cabling show off Facebook's architecture—network on top, with layers of compute, power, and storage.

The open networking effort at OCP is led by Facebook network engineering team lead Najam Ahmad. Its primary goal is to create a specification for an open-source, operating-system-agnostic network switch that will sit at the top of the OCP rack design. You'd think there might be some resistance from networking companies to the idea of giving up their vertical lock-in, but Corddry said Facebook has experienced "pretty good buy-in." So far, Broadcom, Intel, VMware, Big Switch Networks, Cumulus Networks, Netronome, and OpenDaylight have all signed on for the effort.

On June 19, Ahmad and Facebook Vice President of Infrastructure Engineering Jay Parikh announced how Facebook plans to use the switches that come out of that effort. Facebook's network team created a fabric networking architecture based on 10-gigabit-per-second Ethernet. The OCP switches' openness will allow Facebook to turn the switches into components of the overall fabric.

"There will be different sizes of switches," Ahmad said at GigaOm's Structure Conference. "The first one we’re starting in a pretty simple, basic, top of rack type environment, but then we’ll have the fabric switches and the spine switches which will form the fabric itself. They’re essentially cogs in that fabric, devices that we’re building. The operating system will allow us to have better programmability and configuration management and things of that nature, so we can use a lot of the knowledge base and expertise we have from the Linux world from the software side of the world."

Facebook's networking needs outstrip many corporate networks' requirements, as Parikh explained. "Our traffic going across from machine to machine far exceeds the traffic that’s going out from machine to user," he said. "I think our ratio roughly is around 1000:1, so there are 1000 more bytes sent out between our data center, across our data center, than there are going from machines out to users. There is a huge amplification of traffic that is generated and necessary for the real-time nature of our application."
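Parikh's ratio lends itself to a back-of-the-envelope estimate. Assuming, purely for illustration, that 1 Gb/s of traffic leaves a data center for users (a hypothetical figure, not one Facebook gave), a roughly 1000:1 ratio implies:

```latex
B_{\text{internal}} \approx 1000 \times B_{\text{user}}
  = 1000 \times 1\ \text{Gb/s}
  = 1\ \text{Tb/s}
  = 100 \times 10\ \text{Gb/s}
```

That is the aggregate equivalent of a hundred fully loaded 10-gigabit links inside the data center for every gigabit per second sent to users, which gives a sense of scale for Corddry's expectation that faster interconnects will be needed.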

Facebook is looking at technologies for the next step, when copper cabling (regardless of the fabric architecture) is no longer enough. "While 10 G is more than enough for us today, we think within the next few years there's going to be a need for 40 or 100 gigabit technologies," Corddry said. "Optical is great if you sort of get beyond the bandwidth constraints of copper. We haven't yet exceeded the capabilities of copper, but we think that in a couple of years we could."

One route to disaggregating the server and leveraging optical capabilities could be silicon photonics, a technology being developed by Intel and IBM that would allow 100-gigabit-per-second optical connections. "We are looking at a lot of the optical interconnect stuff," Corddry said. "We're looking at Intel's silicon photonics stuff—they're part of OCP, and they have contributed their connector (to the OCP open source)."

But Facebook is also keeping its options open, seeking something that will be both simple to implement and not "esoteric or proprietary such that it locks us into a higher cost, more proprietary path," Corddry said. "So we're looking for that commodity high-speed interconnect, whether it's optical or copper."

Going viral

The OCP Hackathons and other events elevate the do-it-yourself ethic around the open hardware efforts at Facebook. Results are coming out of them, such as hardware that monitors, in real time, the data coming from a car's diagnostic port. And these results don't necessarily have anything to do with data centers. Just as the Raspberry Pi and Arduino have given do-it-yourself hardware developers the tools for an unexpected range of innovations, the open designs and interfaces coming out of OCP could find their way to all sorts of places. And they often yield commodity hardware components.

When I asked Corddry what the reach of Facebook's hardware efforts might be beyond its data center walls, he didn't hesitate. "The principles we follow—of efficiency and vanity free hardware—would work anywhere."

But the hardware being designed through OCP and built by Facebook isn't engineered with that in mind, he added. "Right now we've been focused on data center computing, and my team certainly hasn't been looking at any non-data center applications. The hardware is designed for data center environments and to solve that problem set. You take a little bit of a risk of making hardware to be a jack-of-all-trades. If you design it to work in a pedestal in an office, in a back room at a small business, and in a data center, you usually compromise too many things."

That doesn't mean that something designed with the data center in mind won't find its way into other uses. Power efficiency, low costs, and open interfaces are important in other applications of motherboards, for example. Sure, you can't exactly buy a "Windmill" motherboard from Newegg yet. But sometime soon, you may be able to find something based on OCP designs a little closer to you than a Facebook data center.
